The Case for Monorepos: A Sane Workspace Setup (Part 2)

Learn how to set up dev tooling in a monorepo, run tasks efficiently, release multiple packages and overcome common DevOps challenges

Last time we looked at the advantages of monorepos; now we look at tools and get our hands dirty (Photo by Christopher Burns on Unsplash)

In part 1 of this article, we discussed how a monorepo approach can save you a lot of headaches when scaling a web-based product from an initial MVP to a full-scale multi-service app, and how it helps you write your application in a way that keeps its technical complexity manageable over time.

Now let’s look at how to set up our typical stack for testing, linting etc. to work best in a monorepo, how to approach common tasks with this setup, and how to tackle the challenges that arise as your monorepo grows.

Developer tooling setup

Most of the tools in the modern web development tool belt have some form of monorepo support, which typically brings advantages that fall into one of these categories:

- the possibility of having a shared central config, but also individual configuration per package

- running tasks in parallel for multiple packages for speed improvements (such as testing and releasing packages)

- keeping an overview of dependencies between packages to intelligently cache output of tasks and only run tasks for packages affected by recent code changes

Let’s learn how we can configure popular tools of the web developer stack to leverage their monorepo features:

TypeScript

If you have both backend and frontend services in your monorepo, chances are that both require largely different TypeScript configurations. Depending on what frameworks you are using and how your individual packages are structured, you might need further tsconfig.json adjustments per package. Still you want to share configuration where you can, in order to avoid growing maintenance work with an increased number of packages.

TypeScript has a mode to support this setup called “project references”. When enabled, it treats every package in the monorepo as an individual TypeScript project with its own config. Furthermore, it models dependencies between the packages, which allows TypeScript to only recompile packages that are affected by code changes.

Setting up project references in TypeScript requires a bit of configuration. In short, it involves these steps:

- Add a tsconfig.json configuration file in each package that contains TypeScript code. In order to avoid duplicating configs, you can put common shared configs (e.g. for backend services, frontends, tests etc.) in the monorepo root and reuse them in the packages with the extends keyword.

- Add "composite": true to the tsconfig.json of every package that is referenced by other packages. If you followed the project structure convention in part 1 of this article, this will likely be all packages in packages/libs. This tells TypeScript to pre-build the package and cache information about it.

- Add every TypeScript package from the monorepo that your current package lists as a dependency in its package.json as “references” in the tsconfig.json of the package. Do this for all packages in your monorepo.

- Change your TypeScript command from tsc to tsc --build. Now TypeScript will use the project references.
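As a rough illustration of the third step, the “references” of a package can be derived from its package.json. The following TypeScript sketch is only an assumption about how such a helper might look: the WorkspacePackage shape and the buildReferences and relativePath functions are hypothetical names, not part of TypeScript or of any tool mentioned in this article, and paths are expected to be POSIX-style and relative to the monorepo root.

```typescript
// Hypothetical shape for this sketch; only the fields we need are modelled.
interface WorkspacePackage {
  name: string; // "name" field of the package.json
  dir: string; // path relative to the monorepo root, e.g. "packages/libs/ui"
  dependencies: Record<string, string>; // dependencies and devDependencies merged
}

interface ProjectReference {
  path: string;
}

// Compute the relative path between two directories inside the repository.
function relativePath(from: string, to: string): string {
  const fromParts = from.split("/").filter(Boolean);
  const toParts = to.split("/").filter(Boolean);
  let common = 0;
  while (
    common < fromParts.length &&
    common < toParts.length &&
    fromParts[common] === toParts[common]
  ) {
    common++;
  }
  const up = fromParts.slice(common).map(() => "..");
  const down = toParts.slice(common);
  return [...up, ...down].join("/") || ".";
}

// One reference per dependency that is itself a package of the monorepo.
function buildReferences(
  pkg: WorkspacePackage,
  all: WorkspacePackage[],
): ProjectReference[] {
  const byName = new Map<string, WorkspacePackage>();
  for (const candidate of all) byName.set(candidate.name, candidate);

  const references: ProjectReference[] = [];
  for (const dependency of Object.keys(pkg.dependencies)) {
    const target = byName.get(dependency);
    if (target) references.push({ path: relativePath(pkg.dir, target.dir) });
  }
  return references;
}

// Example with made-up package names:
const ui: WorkspacePackage = { name: "@acme/ui", dir: "packages/libs/ui", dependencies: {} };
const webApp: WorkspacePackage = {
  name: "web-app",
  dir: "packages/apps/web-app",
  dependencies: { "@acme/ui": "*", react: "^18.0.0" },
};
console.log(buildReferences(webApp, [ui, webApp])); // [ { path: '../../libs/ui' } ]
```

External dependencies such as react are skipped because they are not workspace packages; only the internal dependency ends up in the “references” list.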

For more instructions and a deeper explanation of how project references work, check out the official documentation page.

If you don’t have too many packages and inter-package dependencies in your monorepo, it is still manageable to add project references by hand, but once your project grows, it can make sense to automate managing your project references. There are tools that can do this work for you, such as @monorepo-utils/workspaces-to-typescript-project-references.

You might also want to add an npm script to build all composite packages in your monorepo at once after the monorepo is installed locally, e.g. in the “prepare” script of your root package.json.

Jest

Similar to TypeScript, Jest also has a “projects” setting that allows it to treat every package in your repository as its own Jest project that can have individual settings. You can set it up like this:

Similar to the TypeScript setup, add a jest.config.js file to all packages that have Jest tests. Again, your package configs can import and reuse shared configs from anywhere, e.g. for frontend and backend services. Additionally, you might want to configure some more things on the package level:

- displayName: a short name of your package that is shown during test runs next to the test file name

- rootDir: the path to your monorepo root, such as "../../..". This allows you to collect test coverage for the whole monorepo, for instance.

- roots: determines the paths where Jest looks for test files. Put the path to your package here.

In order to keep the codebase DRY, I suggest creating a helper function that generates displayName and roots automatically from the directory path and package.json content of a package, and reusing it in every package’s jest.config.js.
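Such a helper could look roughly like the following sketch. The function name createPackageJestConfig and its parameters are hypothetical; it assumes that the package directory is given relative to the monorepo root and that the package name comes from the package’s package.json.

```typescript
// Hypothetical helper, e.g. kept in a shared file in the monorepo root.
interface PackageJestConfig {
  displayName: string;
  rootDir: string;
  roots: string[];
}

// packageDir: path relative to the monorepo root, e.g. "packages/libs/ui"
// packageName: the "name" field of the package's package.json
function createPackageJestConfig(
  packageDir: string,
  packageName: string,
): PackageJestConfig {
  const segments = packageDir.split("/").filter(Boolean);
  // One ".." per directory level leads from the package back to the monorepo root.
  const rootDir = segments.map(() => "..").join("/") || ".";
  return {
    // Drop an npm scope such as "@acme/" to keep the label short.
    displayName: packageName.replace(/^@[^/]+\//, ""),
    rootDir,
    // <rootDir> is Jest's placeholder for the configured rootDir.
    roots: [`<rootDir>/${segments.join("/")}`],
  };
}

console.log(createPackageJestConfig("packages/libs/ui", "@acme/ui"));
```

A package’s jest.config.js would then spread the returned object into its own config, next to whatever shared preset it reuses.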

Once you have added all package configs, open the jest.config.js of your monorepo root and add the “projects” field with the paths to all of your package configs. This can contain a glob, such as projects: ['<rootDir>/packages/**/jest.config.js'].

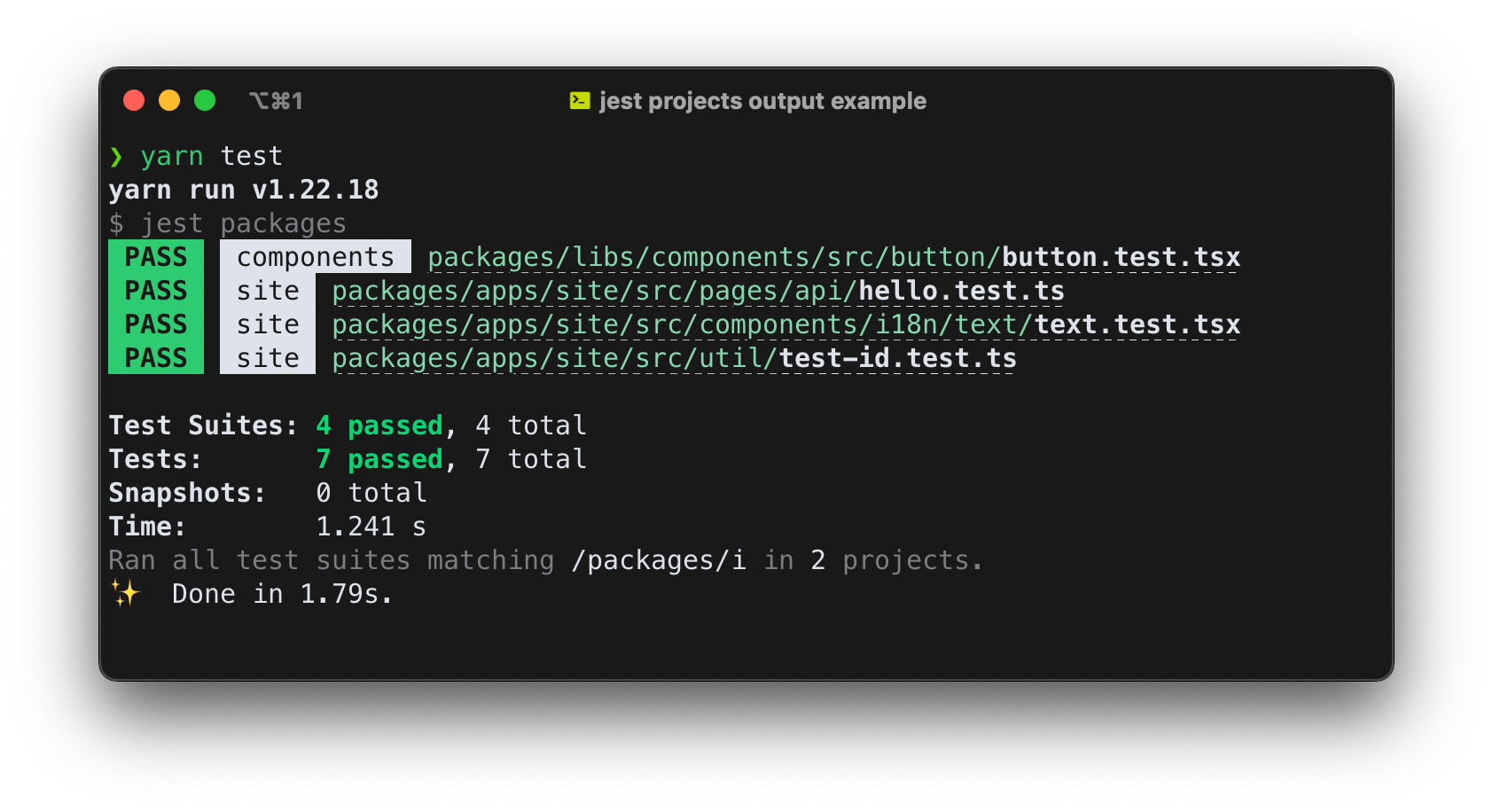

Now Jest treats your packages as individual projects. Another bonus with adding your packages as Jest projects is that it allows Jest to parallelize test runs between packages, which in my case lead to quicker overall test runs for the whole monorepo.

Jest adds the displayName of each project to the test output

Naturally, due to the number of packages and overall easier testability through better separation of concerns that you get with the monorepo approach (see part 1 of this article), you will quickly reach a fairly high number of tests, so execution speed is important.

Running TypeScript tests through the default TypeScript compiler via ts-jest turned out to be far too slow for this case in my experience. I had very good experiences with esbuild-jest, which reduced the time for test runs by 90% (!) compared to ts-jest. If you favor Vite as a build tool, you might also want to keep an eye on Vitest. At the time of writing, though, it doesn’t seem to have an option comparable to Jest’s “projects” yet.

ESLint

ESLint has no special built-in mode for running inside a monorepo, but it features different mechanisms for merging configuration:

- config files can “extend” other configs

- configs in subdirectories have priority over configs in their parent directories

So technically, it would be possible to add individual ESLint configs to all packages in your monorepo and extend shared configs where you need to. However, I have always found the ESLint documentation opaque and insufficient on which settings of a config used in “extends” are actually extended, whether individual settings are merged or overridden, and how that interacts with configs “inherited” from parent directories. In many cases, it just didn’t behave as I expected.

Linting by itself is also a different case than the other tools discussed so far: While you often want to have different TypeScript or testing configs for individual packages due to the different technical foundations of individual services, your coding standards will likely be largely identical across your packages. If you already use TypeScript as the same language for all your monorepo packages, you probably don’t desire drastically different code styles for different packages, no matter if they are frontend services, backend services or libraries.

That is why I usually resort to having only one ESLint config file in the monorepo root and include all monorepo packages in the ESLint task of the root package.

Sometimes, there might still be the need to adjust linting configs in specific places of your monorepo, such as turning off conflicting rules for a certain package or type of file. An example would be a larger monorepo that has Jest tests, but also a package with end-to-end tests written in Cypress. The ESLint plugins for Jest and Cypress don’t play well with each other, because each might apply its rules to the test files of the other tool.

In this case, it’s possible to add additional configuration for individual file name patterns via the “overrides” option in the root ESLint config, where you can specify the path to a certain package, a certain file extension or the like and override the configuration for it.

Additionally, you want to add the attribute root: true to the ESLint config in your monorepo root. This prevents ESLint from searching for config files higher up in your file system.
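To make the Jest/Cypress example more concrete, here is a sketch of what such a root config might contain, written as a typed object. It assumes the classic .eslintrc format, that eslint-plugin-jest and eslint-plugin-cypress are installed, and a made-up package path (packages/apps/e2e-tests); the interfaces only model the handful of options used here.

```typescript
// Minimal types for this sketch; the real config schema has many more options.
interface EslintOverride {
  files: string[];
  excludedFiles?: string[];
  extends?: string[];
}

interface EslintConfig {
  root: boolean;
  extends: string[];
  overrides: EslintOverride[];
}

// Hypothetical location of the Cypress package inside the monorepo.
const E2E_PACKAGE = "packages/apps/e2e-tests";

const eslintConfig: EslintConfig = {
  // Stop ESLint from looking for configs above the monorepo root.
  root: true,
  extends: ["eslint:recommended"],
  overrides: [
    {
      // Jest rules for unit test files everywhere except the e2e package.
      files: ["**/*.test.ts"],
      excludedFiles: [`${E2E_PACKAGE}/**`],
      extends: ["plugin:jest/recommended"],
    },
    {
      // Cypress rules only inside the e2e package.
      files: [`${E2E_PACKAGE}/**/*.ts`],
      extends: ["plugin:cypress/recommended"],
    },
  ],
};

console.log(JSON.stringify(eslintConfig, null, 2));
```

In a real .eslintrc.js, the object would be assigned to module.exports; the file patterns need to be adapted to your own naming conventions.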

If you have drastically different TypeScript configs across your monorepo packages, you can also configure the TypeScript ESLint parser to recognize these configs for linting as well. Be aware that this can have a negative performance impact, though. In my case it was sufficient to point it to the tsconfig in my monorepo root, which references all packages and is used to build all packages at once.

Bonus Tip: Dependency Management with depcheck

In part 1 of the article we learned how monorepos can provide a better overview of external dependencies, as we can split up a long list of dependencies into smaller ones for the packages where they are actually used.

But even then, we might still encounter issues related to our dependencies:

Installed, but unused dependencies: we might refactor a package to not use a certain dependency anymore (e.g. a 3rd party library), but forget to remove the dependency. While this does not immediately cause any issues, it prolongs your install time, increases maintenance effort and can bring confusion to the team.

Used, but not installed dependencies: the opposite could be the case as well: we are using dependencies in a package where they are not installed. In a single package application, this would be detected rather easily: your application would simply not build or produce an error at runtime, where the dependency is used. In a monorepo however, it is possible that the code still works, even if the package has not added the dependency. How so?

This phenomenon is called “phantom dependencies” and only concerns the package managers npm and yarn v1. If more than one package in a monorepo has added a certain package as dependency, the package managers will then hoist the dependency to the monorepo root package. Simply put, the package is installed in the node_modules directory of the monorepo root directory and not duplicated inside the node_modules directories of the packages that require it. While this is nice in principle, it means that a third package in the monorepo could now use the dependency as well without explicitly having added it as a dependency itself. This can (and ultimately will, especially in bigger teams) lead to nasty bugs in packages of your monorepo, even if the packages themselves were not touched!

Imagine package-a and package-b have defined a dependency on lodash, and package-c does not, but uses it in its code anyway. This would work, until package-b removes the dependency after a refactoring. After a new npm install / yarn install, the lodash dependency would now be moved to package-a/node_modules and package-c would not have access to lodash anymore. Hence, package-c would be broken although there were no changes in it. Good luck trying to figure out why, if you are responsible for package-c and get an error report.
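The scenario can be modelled in a few lines of TypeScript. This is only a simplified sketch under stated assumptions: the PackageInfo shape and the findPhantomDependencies function are hypothetical, the list of imported modules is given as input rather than extracted from source files, and hoisting is approximated as "any dependency declared anywhere in the monorepo is resolvable everywhere".

```typescript
interface PackageInfo {
  name: string;
  declared: string[]; // dependencies listed in the package's package.json
  imported: string[]; // external modules the package's code actually uses
}

// Finds dependencies that a package uses without declaring them, but that
// still resolve because some other workspace package declared them.
function findPhantomDependencies(
  packages: PackageInfo[],
): Map<string, string[]> {
  const hoisted = new Set<string>();
  for (const pkg of packages) {
    for (const dependency of pkg.declared) hoisted.add(dependency);
  }

  const result = new Map<string, string[]>();
  for (const pkg of packages) {
    const declared = new Set(pkg.declared);
    const phantoms = pkg.imported.filter(
      (dependency) => !declared.has(dependency) && hoisted.has(dependency),
    );
    if (phantoms.length > 0) result.set(pkg.name, phantoms);
  }
  return result;
}

const monorepo: PackageInfo[] = [
  { name: "package-a", declared: ["lodash"], imported: ["lodash"] },
  { name: "package-b", declared: ["lodash"], imported: ["lodash"] },
  { name: "package-c", declared: [], imported: ["lodash"] },
];

console.log(findPhantomDependencies(monorepo)); // Map(1) { 'package-c' => [ 'lodash' ] }
```

Only package-c is reported: it relies on lodash being hoisted by its siblings, which is exactly the situation that breaks when the hoisting changes.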

Another case where phantom dependencies are problematic is if you want to publish package-c to make it available outside of your monorepo. In your local monorepo dev setup, everything would work as expected, but once someone else installs your package as a dependency, it won’t (unless they happen to have added the correct lodash version as a dependency to their project already).

Luckily, both types of issues can be completely avoided. There is an ESLint rule to avoid imports of uninstalled packages, but it only works if you explicitly import the package in your source files. Quite often though, you will have dependencies that are used implicitly, which means you don’t add an import statement for them. This is often, but not only the case with devDependencies. Some examples are:

- Packages that are implicitly provided in an execution environment, such as Jest in a test file

- Packages that are referenced via naming convention, such as ESLint plugins that are extended in the ESLint config file

- CLI tools that you call in an npm script

The depcheck package is a tool that checks the list of dependencies in a package.json against their usage in your code. It can detect installed but unused dependencies as well as used but not installed dependencies. Furthermore, it understands the conventions of many popular tools such as Webpack, Babel, SCSS and ESLint and can work for these cases too.

In cases where depcheck cannot understand that a package is implicitly used by another dependency and gives a false alarm of unused dependencies (such as in the case of Storybook.js plugins), the dependencies can be ignored in a .depcheckrc config file.

depcheck does not work in a monorepo by default, but you can simply add a .depcheckrc to each of your packages and also the monorepo root directory and then add a task to run the tool inside the monorepo root and in each package. In case of yarn v1, it would look like this:

// in your root package.json:

{

"devDependencies": {

"depcheck": "^1.4.3"

},

"scripts": {

"depcheck": "depcheck && yarn workspaces run depcheck"

}

}

Running scripts in your monorepo efficiently

Now that our basic tool set is working inside the monorepo, we can have a look at how to leverage some of the features that come with monorepos to make our lives easier and save us time when managing larger projects.

Let’s go over some scenarios that we are typically facing in a larger project setup and want to automate for increasing levels of project sizes.

Level 1: Running a certain task in multiple packages (if it exists)

This feature is part of all package managers that support workspaces in any way:

# npm v7 and higher

npm run my-task --workspaces --if-present

# yarn v1

# (ensure that my-task is present in all packages or it will fail)

yarn workspaces run my-task

# yarn v2 with workspace-tools plugin:

yarn workspaces foreach run my-task

# pnpm

pnpm --recursive run my-task

From my experience, there are not too many use cases where you actually need this. In code examples across the internet, this is often used for running tests, linting code or publishing packages. For all three cases, this article has shown options to handle these tasks intelligently on a global level for your monorepo, which saves you a lot of configuration in your individual packages. You will mostly need this for tools that don’t have monorepo support out of the box (such as the depcheck example we have seen earlier).

Level 2: Running tasks from multiple packages in parallel

If you have a lot of packages in your monorepo, running certain tasks sequentially in all of them can take a lot of time. If the task doesn’t depend on results from other packages, we can parallelize the execution to speed things up.

There are multiple ways to approach this. Some package managers have this behavior built-in:

# yarn v2 with workspace-tools plugin:

yarn workspaces foreach --parallel run my-task

# pnpm

pnpm --recursive --parallel run my-task

If you are using npm or yarn v1, there are multiple options to run scripts in parallel:

The first option is Lerna, which has the lerna run --parallel my-task command. Lerna has some more useful features, which we will get back to later, but also requires some additional configuration and slight changes of your typically used workflow and commands.

For simpler use cases there are packages like npm-run-all and concurrently, which can run multiple npm commands at the same time in a simple way. By default, they don’t work across multiple packages, but we can still make them work. Let’s say we have a task that starts a dev server in some of our packages and we want to start up all dev servers at once. Then we can do something like this:

// assuming you are using Yarn v1, have these scripts in your root package.json:

{

"scripts": {

"dev:web-app": "yarn workspace web-app run dev",

"dev:payment-service": "yarn workspace payment-service run dev",

"dev:storybook": "yarn workspace storybook run dev",

"dev:user-service": "yarn workspace user-service run dev",

"dev": "concurrently \"yarn:dev:*\""

},

"devDependencies": {

"concurrently": "^7.2.2"

}

}

// then running "yarn dev" will start all dev servers in parallel

Of course, this doesn’t scale well for a large number of packages, but for a simple monorepo setup it does the job just fine.
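If maintaining these entries by hand becomes tedious, they could also be generated. The following sketch assumes Yarn v1 and the same naming pattern as above; buildDevScripts is a hypothetical helper, and writing the result back into the root package.json is left out.

```typescript
// Generates one "dev:<workspace>" script per workspace plus a "dev" script
// that starts all of them through concurrently.
function buildDevScripts(workspaces: string[]): Record<string, string> {
  const scripts: Record<string, string> = {};
  for (const name of workspaces) {
    scripts[`dev:${name}`] = `yarn workspace ${name} run dev`;
  }
  // "yarn:dev:*" lets concurrently expand to all scripts starting with "dev:".
  scripts["dev"] = 'concurrently "yarn:dev:*"';
  return scripts;
}

console.log(
  buildDevScripts(["web-app", "payment-service", "storybook", "user-service"]),
);
```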

For a more complex dev setup with multiple services you eventually might want to use Docker for your local dev setup. In this case it makes sense to add a Docker Compose config that links all your services together.

Level 3: Running tasks only when a dependency changed

The more complex your monorepo grows, the more important it becomes how fast common tasks can be executed. If your test or lint tasks take a very long time, this will multiply with the number of packages in your monorepo, which can have a bad effect on the developer experience pretty soon.

At some point, it is no longer enough for individual tasks to run as fast as possible; you don’t want them to run at all if they don’t need to.

Say your monorepo consists of 50 packages. You have changed code in one package, which is used by four other packages in your monorepo (as direct or nested dependency). In this case, if you want to create a new build from your monorepo, you want to rebuild exactly five packages (the changed one and the ones that depend on it) and not 50.

If we naively rebuild everything, this might take 10 times as long (or even more, depending on the complexity of the individual packages) and 45 of the rebuilt packages will look exactly the same as before. Also, we need to know in which order our packages must be built, so that every build works. Of course, we don’t want to write any build scripts manually to address this for us, as this would not scale well.

There is software that helps with exactly this problem: it understands cross-package dependencies inside your monorepo, models them as a dependency graph and works out which packages are affected by changes in others. This way, these tools can run multi-package tasks only for packages that would produce a different result than before and use previously cached results for all other packages.
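The core idea can be sketched in a few lines of TypeScript. This is a simplified illustration, not how any of the tools below are actually implemented: the DependencyGraph type and both functions are hypothetical, the graph is given as plain data, and dependency cycles are assumed not to exist.

```typescript
// Maps each package name to the workspace packages it depends on directly.
type DependencyGraph = Record<string, string[]>;

// Returns the changed packages plus everything that depends on them,
// directly or through other packages.
function findAffected(graph: DependencyGraph, changed: string[]): Set<string> {
  const affected = new Set(changed);
  let grew = true;
  while (grew) {
    grew = false;
    for (const [pkg, deps] of Object.entries(graph)) {
      if (!affected.has(pkg) && deps.some((dep) => affected.has(dep))) {
        affected.add(pkg);
        grew = true;
      }
    }
  }
  return affected;
}

// Orders the given packages so that every package comes after the packages
// it depends on (depth-first topological sort).
function buildOrder(graph: DependencyGraph, packages: Set<string>): string[] {
  const ordered: string[] = [];
  const visited = new Set<string>();
  const visit = (pkg: string): void => {
    if (visited.has(pkg)) return;
    visited.add(pkg);
    for (const dep of graph[pkg] ?? []) {
      if (packages.has(dep)) visit(dep);
    }
    ordered.push(pkg);
  };
  for (const pkg of packages) visit(pkg);
  return ordered;
}

const graph: DependencyGraph = {
  utils: [],
  ui: ["utils"],
  "web-app": ["ui"],
  api: ["utils"],
  docs: [],
};

const affected = findAffected(graph, ["utils"]);
console.log(buildOrder(graph, affected)); // [ 'utils', 'ui', 'web-app', 'api' ]
```

The docs package is not rebuilt because nothing it depends on has changed; for such packages a real tool would reuse the cached result.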

As always in the web dev world, you have multiple options here:

Turborepo: Turborepo works on top of the workspaces features of the usual package managers. It adds an extra turbo.json config file, where you define so-called “pipelines”, which define common tasks in the scope of the whole monorepo along with their dependencies. Otherwise, it doesn’t require any changes to your project setup.

An example would be a “build” pipeline, which defines that the “build” task should be run in all packages that have one. Turborepo reads the dependencies between packages from their package.json files and therefore knows the correct build order and which packages need to be rebuilt based on the latest file changes. The build artifacts are then cached so that they can be used right away the next time a build is triggered. The same goes for the command-line output of tasks that don’t produce any file changes, such as linting.

Pipelines can in turn depend on other pipelines: You might need to build your packages first before you can run your test suite. This is a dependency that can be modeled in the Turborepo configuration as well. And if you’re now thinking “Hey, that sounds a lot like configuring a CI pipeline!”, you’re exactly right. In fact, you can simplify your CI setup by just using Turborepo in your CI right away.

The cache that Turborepo produces when running pipelines can not only be used on your machine, but also be synced to the cloud, so it can be downloaded and reused on your teammates’ machines or even in your CI environment.

A good use case for this is reviewing someone’s pull request: Sometimes just looking at file changes is not enough to properly review a PR; instead you want to check out and test the change locally to be able to fully understand it. If your monorepo is complex and the cache is uploaded to the cloud, then when you run a local build after checking out the branch, Turborepo can download and use the cache instead of performing the same build again on your machine, which can be a lot faster. If you don’t want to use Vercel’s cloud service for this, there are already open source solutions that let you store your cache elsewhere, e.g. in AWS S3.

In your CI pipeline this can be especially useful, since common tasks like running tests and building software often take even more time in CI environments than on your local machine. Cutting down CI pipeline run times can save you real money at this point.

Nx (and Lerna): Both tools are now maintained by the same company and newer versions of Lerna actually use Nx under the hood.

Nx works very similarly to Turborepo. It has all the features explained above, including a cloud service to share caches, but even has some features on top of it: The visualization capabilities of your monorepo dependency graph are more advanced, and Nx also has a generator functionality to bootstrap whole packages of your monorepo, which can be extended with plugins for different popular services. This way you can bootstrap a whole monorepo with a frontend app, backend services, end-to-end test and unit test framework and a Storybook in a matter of minutes. Very nice.

So which one to choose? It really depends on personal preference. Both do a great job in speeding up monorepo workflows. Turborepo is easier to configure, but Nx has a larger feature set. The following video could help you with the decision:

Monorepos - How the Pros Scale Huge Software Projects // Turborepo vs Nx (Fireship on YouTube)

Jeff Delaney from Fireship.io has made a great video comparing Turborepo and Nx

Versioning and publishing multiple packages in your monorepo

Depending on your project setup, the need might arise to share individual packages of your monorepo with people outside of your project team. While this is more often the case with monorepos of complex open-source libraries, it can happen with monorepos for digital products as well. This mostly concerns libraries that you have developed for your product and now want to reuse for other products, such as a library of reusable UI components, clients for 3rd party software such as a CMS or a payment provider, or custom shared configuration presets like ESLint or TypeScript configs.

The complexity of setting up a proper package release management workflow inside your monorepo usually grows with the number of packages. If you want to release only one package, the effort is pretty trivial. In the simplest case, you can navigate to the package folder, increase the version and run npm publish.

Usually, a proper release contains more than just publishing a new package version though. You probably also want to:

- Update the changelog of your package to document what’s new in the new package version and if there are breaking changes

- Create a release in GitHub / GitLab with the changelog as well

- Push the changelog and updated package.json with the new version to git

- Tag the release commit with the new version so you can find it again later

- Maybe notify other people that a new version has been released

It’s possible to do all this manually, but even for only one package, you will soon notice that this is annoying and error-prone to do by hand. Luckily there are tools to automate this for you, such as semantic-release or release-it. Both tools also have the option to automatically determine the new package version for you, e.g. based on your commit messages since the last release, provided that you use a proper commit message convention such as Conventional Commits. Eventually, you might want to run your releases completely automated in your CI when new code is pushed to your release branch, so that no human needs to intervene in the process.
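To illustrate how a version increment can be derived from commit messages, here is a deliberately simplified sketch of the Conventional Commits idea. It is not the actual logic of semantic-release or release-it; the function names are made up, and only the most common rules are covered ("fix" leads to a patch, "feat" to a minor, and a "!" marker or a BREAKING CHANGE footer to a major release).

```typescript
type Bump = "major" | "minor" | "patch" | "none";

const RANK: Record<Bump, number> = { none: 0, patch: 1, minor: 2, major: 3 };

// Determines the increment implied by a single commit message.
function bumpForCommit(message: string): Bump {
  const header = message.split("\n")[0] ?? "";
  // Matches e.g. "feat: ...", "fix(ui): ..." or "refactor!: ..."
  const match = /^(\w+)(\([^)]*\))?(!)?:\s/.exec(header);
  if (!match) return "none";
  const type = match[1];
  const breakingMarker = match[3];
  if (breakingMarker || /^BREAKING[ -]CHANGE:\s/m.test(message)) return "major";
  if (type === "feat") return "minor";
  if (type === "fix") return "patch";
  return "none";
}

// The release increment is the highest increment of all commits since the last release.
function bumpForRelease(messages: string[]): Bump {
  let highest: Bump = "none";
  for (const message of messages) {
    const bump = bumpForCommit(message);
    if (RANK[bump] > RANK[highest]) highest = bump;
  }
  return highest;
}

console.log(bumpForRelease(["fix: handle empty cart", "feat(ui): add date picker"])); // minor
```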

This workflow is a lot nicer already, but it has a downside: It only works for a single published package in your repository. If new versions are based on version tags and git commit messages in your repo, the tools can’t know which package they concern. The version tags can be adjusted by prefixing the version with the package name, but the commit messages are not so easy, because the tools don’t know which packages are affected by a change. Remember that packages in your monorepo can depend on each other, so a change in one package might affect another package as well.

For this case, we need tools that can actually understand dependencies inside our monorepo. Such tools exist, but most of them don’t have quite as complete a feature set as the tools mentioned above for automating a single-package release workflow. There have been multiple attempts to add monorepo logic to tools like semantic-release, but sadly none has resulted in a tool that is widely used and/or still maintained. Instead, other tools have appeared that handle publishing inside monorepos specifically.

Chances are that you want to release more than one package in your monorepo at once (Photo by Ankush Minda on Unsplash)

The first tool that supports releasing multiple packages inside a monorepo is Lerna, which has had this feature for a long time. It lets you choose whether you want to synchronize the versions of the packages in your monorepo or version your packages independently. Lerna can then take care of versioning and publishing your packages with the lerna version and lerna publish commands. By default, you still need to tell Lerna yourself what the new version will be (major, minor or patch increment), and changelogs are not generated unless you opt in to its Conventional Commits mode via the --conventional-commits flag.

A tool that can actually create changelogs, determine the next package versions and create release commits inside a monorepo is changesets, so this would be more suitable for running inside a CI environment. The tool has its own workflow to create information about a change that is relevant to the next release, where for each change you specify the type of version increment, which packages are affected and a textual description of the change, which will be added to the repository in Markdown files. From this info, the tool can derive the new package versions when the next release is triggered.
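The way versions are derived from such change descriptions can be sketched as follows. This is a simplified model under stated assumptions, not the changesets implementation: the ChangeEntry shape and both functions are hypothetical, the change entries are given as objects instead of being parsed from Markdown files, versions are plain "x.y.z" strings, and version bumps of dependent packages are ignored.

```typescript
type Increment = "patch" | "minor" | "major";

interface ChangeEntry {
  packages: string[]; // names of the affected packages
  increment: Increment;
  summary: string; // textual description for the changelog
}

// Ordered from smallest to largest increment.
const ORDER: Increment[] = ["patch", "minor", "major"];

function incrementVersion(version: string, increment: Increment): string {
  const [major = 0, minor = 0, patch = 0] = version.split(".").map(Number);
  if (increment === "major") return `${major + 1}.0.0`;
  if (increment === "minor") return `${major}.${minor + 1}.0`;
  return `${major}.${minor}.${patch + 1}`;
}

// For every package mentioned in a change entry, apply the highest requested increment.
function planRelease(
  current: Record<string, string>,
  changes: ChangeEntry[],
): Record<string, string> {
  const highest: Record<string, Increment> = {};
  for (const change of changes) {
    for (const pkg of change.packages) {
      const previous = highest[pkg];
      if (
        previous === undefined ||
        ORDER.indexOf(change.increment) > ORDER.indexOf(previous)
      ) {
        highest[pkg] = change.increment;
      }
    }
  }

  const next: Record<string, string> = {};
  for (const [pkg, increment] of Object.entries(highest)) {
    const version = current[pkg];
    if (version !== undefined) next[pkg] = incrementVersion(version, increment);
  }
  return next;
}

const plan = planRelease(
  { ui: "1.2.3", utils: "0.4.0", api: "2.0.0" },
  [
    { packages: ["ui", "utils"], increment: "patch", summary: "Fix date parsing" },
    { packages: ["ui"], increment: "minor", summary: "Add date picker" },
  ],
);
console.log(plan); // { ui: '1.3.0', utils: '0.4.1' }
```

Packages without any change entry (api in this example) are not part of the release plan at all.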

The last tool you might want to look at when you have a more complex release process in your monorepo is auto, which uses Lerna under the hood but adds the ability to determine the next release version and to generate changelogs. By default, auto uses labels on a pull request to determine the version increment, but there is a conventional commits plugin as well. Additionally, auto has a lot more useful plugins, e.g. one to notify other people via Slack when a new release has been published.

Operational challenges in monorepo projects

Finally, let’s also have a look at some DevOps-related challenges that can happen in monorepo projects, especially with larger teams or even multiple teams contributing to the same repository and see how we can address them.

Pull request accumulation

This is not strictly a monorepo-related issue, but happens with any large repository with a larger number of contributors: People will quickly generate a large number of pull requests in a short time, which can slow down the development process, e.g. by people depending on changes from other PRs before they are merged.

Another relevant case of slowdown is using GitLab with a merge method other than “create a merge commit”: all other methods require that the merge request can be fast-forwarded onto the target branch before it can be merged. That means that if you want to merge multiple merge requests, after merging the first one you need to rebase the next feature branch on the target branch again and wait for the CI pipeline to succeed before you can merge it, and repeat that for all other branches. This can slow down a team significantly. So here, even more than elsewhere, a structured collaboration process is required to ensure that merge requests are kept small and are reviewed and merged quickly, so that they do not pile up.

Docker builds

Even if you don’t use Docker for development, you might want to use it for deploying the services your product consists of.

A monorepo with multiple packages that depend on each other poses some challenges to the efficiency of a Docker build. You can’t simply put a Dockerfile inside one of your packages and build the image in the context of the package directory, because the build then has no access to anything outside of that directory; the individual package doesn’t really know that it lives inside a monorepo. So if you want to avoid manually moving code from other packages around (which you should), you can really only build the images for individual services in the context of the monorepo root.

This, however, means that you need to install dependencies for the whole monorepo and include all packages in the image. Any code change in any package then invalidates the cached Docker layers, which have to be rebuilt (by installing all monorepo dependencies again).

There are some options to help with this. The first one is using multi-stage builds, where you create a first image that installs all dependencies of the monorepo and then reuse it (locally or in your CI pipeline) as the basis to build your individual services in their respective Dockerfiles. Also, if you are using Nx or Turborepo with their cloud-based build caches, you can use them inside your Docker environment as well to speed up builds, which usually take even longer inside Docker in a CI environment than they do on your local machine.

Still, efficient Docker builds inside a monorepo remain a challenge that requires some manual testing, tweaking and including/excluding certain packages for each new monorepo.

Managing secrets for multiple services

Almost all apps or services in your monorepo will eventually need some sort of secret environment variables to run, which cannot be checked in to the repository in plain text, such as passwords, API keys or access tokens for private package registries and the like.

A common convention for local development is to put them into .env files that are gitignored. If you have multiple services that all need their own secrets though, how do you manage to keep them up to date throughout your team?

You probably don’t want all team members to navigate into multiple service folders and edit .env files manually for initially setting up the project on their machines and again if any of these files need to be changed.

What you can do instead is make these services point to a central .env file in your monorepo root, which contains the secret env vars for all services required for local development. This central file can either be checked into the repo in encrypted form or provided to the team through a password manager or similar tools.

One option for JavaScript services to support this is to configure the dotenv package to load the env file from a different location than the package directory; a universal and even easier option is to use a plain old symbolic link instead. Symlinks can be checked into git without a problem. You would just gitignore the actual .env file in the monorepo root that they point to.

In practice it would look like this:

# go to your package directory

cd packages/apps/my-service

# create a .env symlink that points to the monorepo root .env

ln -s ../../../.env .env

# stage the package .env file symlink in git

git add .env

Now you only need to keep one central .env file up to date. It is advisable to indicate in your shared central .env file which env variables are used by which services, either through comments or by prefixing the env variable names accordingly.
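If you go with prefixes, each service can pick its own variables out of the shared environment. The following sketch assumes a made-up naming scheme (for example PAYMENT_ for a payment service); envForService is a hypothetical helper, not part of dotenv or any other package.

```typescript
// Returns all variables that start with the given prefix, with the prefix removed.
function envForService(
  env: Record<string, string | undefined>,
  prefix: string,
): Record<string, string> {
  const result: Record<string, string> = {};
  for (const [key, value] of Object.entries(env)) {
    if (value !== undefined && key.startsWith(prefix)) {
      result[key.slice(prefix.length)] = value;
    }
  }
  return result;
}

// Example with made-up variables as they might appear in the central .env file.
// In a service you would typically pass process.env instead.
const sharedEnv = {
  PAYMENT_API_KEY: "abc123",
  PAYMENT_WEBHOOK_SECRET: "s3cret",
  USER_DB_URL: "postgres://localhost/users",
};

console.log(envForService(sharedEnv, "PAYMENT_")); // { API_KEY: 'abc123', WEBHOOK_SECRET: 's3cret' }
```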

Conclusion

So there you have it. We now know how to make common developer tooling work inside a monorepo, how to run tasks more efficiently, including an orchestrated release of multiple packages, and learned about common operational challenges when working inside a monorepo with multiple people.

I hope that I could clear up some of the confusion that you had about using a monorepo for scaling a web-based service over time, so that you have enough information to make an educated decision on your own whether this approach can be a viable option for your next project.