The Case for Monorepos: Scaling Web Projects without the Chaos (Part 1)

Some challenges you can expect when scaling web apps from an MVP to full-scale multi-service apps, and how monorepos can help you with them

A well-structured monorepo setup can help keep your project in order (Photo by Niklas Ohlrogge on Unsplash)

Imagine the following scenario: You start developing a new web-based product for your company. As an experienced digital product team, you start with a lean approach, building an MVP with just the core functionality to verify that the product idea is worth building upon.

After the successful first launch, you realize that building the complete product brings a lot more complexity with it: You might need some additional backend services, a marketing website, a shared UI component library and a good amount of tests to maintain trust in your codebase for future refactoring. How do you approach this?

One way (not) to do it

A seemingly simple approach would be to just add the new features to your existing codebase, right? Just install the packages you need, like

- Jest and Cypress for testing,

- ESLint and Prettier for not having to argue about semicolons and indentation in your pull requests,

- Storybook to write your components,

- TypeScript to catch nasty type errors at build time,

- and maybe dockerize everything for consistent developer experience and deployments.

Now you already see the first challenges coming up:

- Your frontend application might need a slightly different build setup than your backend, and your tests and Storybook yet another one. Suddenly you have Webpack, Parcel, esbuild, Babel and their respective plugins as dependencies in your package.json. But which of these dependencies belong to which service, and are they actually still used in the project? That is hard to say for anyone new to the project, and also for yourself a few weeks later, when the automated security checks in your repo (that you have hopefully set up) report a vulnerability in one of these dependencies.

- You realize that different parts of your application might need different tooling configuration: backend and frontend code might need different Jest settings, the linting config for your source code might not work well with your Jest or Cypress test files, and your individual services might need different TypeScript configs. So you start to put additional config files in subfolders to override the ones in the parent folder. Soon it gets tricky to know exactly which settings apply to which source files.

- As you had to bring your MVP to market fast, you haven’t added any tests yet. Now that you start to add them, you realize that your code is actually pretty hard to test because it is tightly coupled, with a lot of interdependencies between different files. This wasn’t an issue when writing the initial MVP, but now it makes testing very time-consuming, as you would have to mock a lot of things from files that have little to do with the code you wanted to test.

- You end up with a lot of Dockerfiles in the root folder of your repository, as all of your services (frontend, backend, Cypress, Storybook etc.) have different requirements, some even need a different setup when running in the CI, and you want to keep individual Docker images as small as possible. Also, lately your CI pipeline takes ages to build, because you always have to perform a full npm install first and your package.json is steadily growing. It already lists 70 dependencies, which in turn have a lot of dependencies of their own and might need to perform build steps after installing. This slows you down massively, as your dev team has grown in the meantime to scale the product, and you usually have about 10 pull requests open at the same time, which means either a lot of merging and conflict resolving or a lot of communication effort.

At this point your repository is already so complex that when you discover you need another backend service, you decide to just create a new repository for it. This will make creating the service a lot faster, right? It is an independent microservice after all, and who knows, maybe it could be useful for another product at some point?

So you get your service up and running, and indeed it went a lot faster than before, but then you realize that things got even more complicated:

- It makes sense to share TypeScript types for your backend endpoint data with your frontend that receives the data, but the types are now in your backend service repository only. What to do about it? Add another repository for shared things and publish them as an internal npm package? Suddenly you have to care about versioning and keeping dependencies in sync between your repos.

- For development environments, you want to run your new backend service together with your existing services, but you can’t simply add a Docker Compose file for both, because you can’t know where the other repo with the Dockerfile is located on other developers’ machines, or whether it is present at all. What to do about it? Build and publish the Docker image on an internal registry? You absolutely don’t want to do that for every change.

- Somehow your project got a lot more communication-intensive again, because now you also really need to take care of versioning and avoid breaking changes in your backend service, as you cannot deploy your service together with the rest of your application anymore. If you are not careful, there could be a version of your frontend online at some point that expects data which the new version of the backend service no longer provides, which means the site would likely break.

- Lastly, work on the new marketing site has started, and the team is asking whether you can share that UI component library somehow. Surely they don’t want to implement these components again on their own. Apparently you need to publish and version another package now. But you can’t easily share the components or even extract them to another repository, because they depend on your frontend application, which you wrote with Next.js, while the marketing site team is using Gatsby.

Wow, this has gotten into a real mess, hasn’t it? Somehow all your devs spend most of the time on administrative tasks and arguing about ways of working together instead of actually working on new features. How did we get here? Surely there must be a better way?

Photo by Taylor Vick on Unsplash

A good analogy for this situation is a server cabinet: For a very small setup, just connecting all cables by the shortest route will get you going quickly. Once your setup grows, though, this approach can lead to an uncontrollable, entangled monster where no one knows anymore which cable goes where and why. Finding the root cause of an error, or just adding a new connection, then becomes a complex and time-consuming task.

A more sustainable way would be to first think about how different parts of the system should be connected, decide where the cables should go and where not, then group them together, cut them to size and label them nicely.

In our web app, the cables are the dependencies between different parts of the software. Let’s see how we can untangle them by using a monorepo approach:

How do monorepos work?

A monorepo is a single Git repository that contains multiple npm packages in a structured way. Packages are essentially subfolders with their own package.json file that are recognized by the package manager and can be added as dependencies to other packages in the same repository. The packages will be linked together automatically by the package manager. These packages can be libraries or applications like a backend service. The individual packages can, but don’t have to, be published to an npm registry. An individual package inside a monorepo is often also called a workspace.

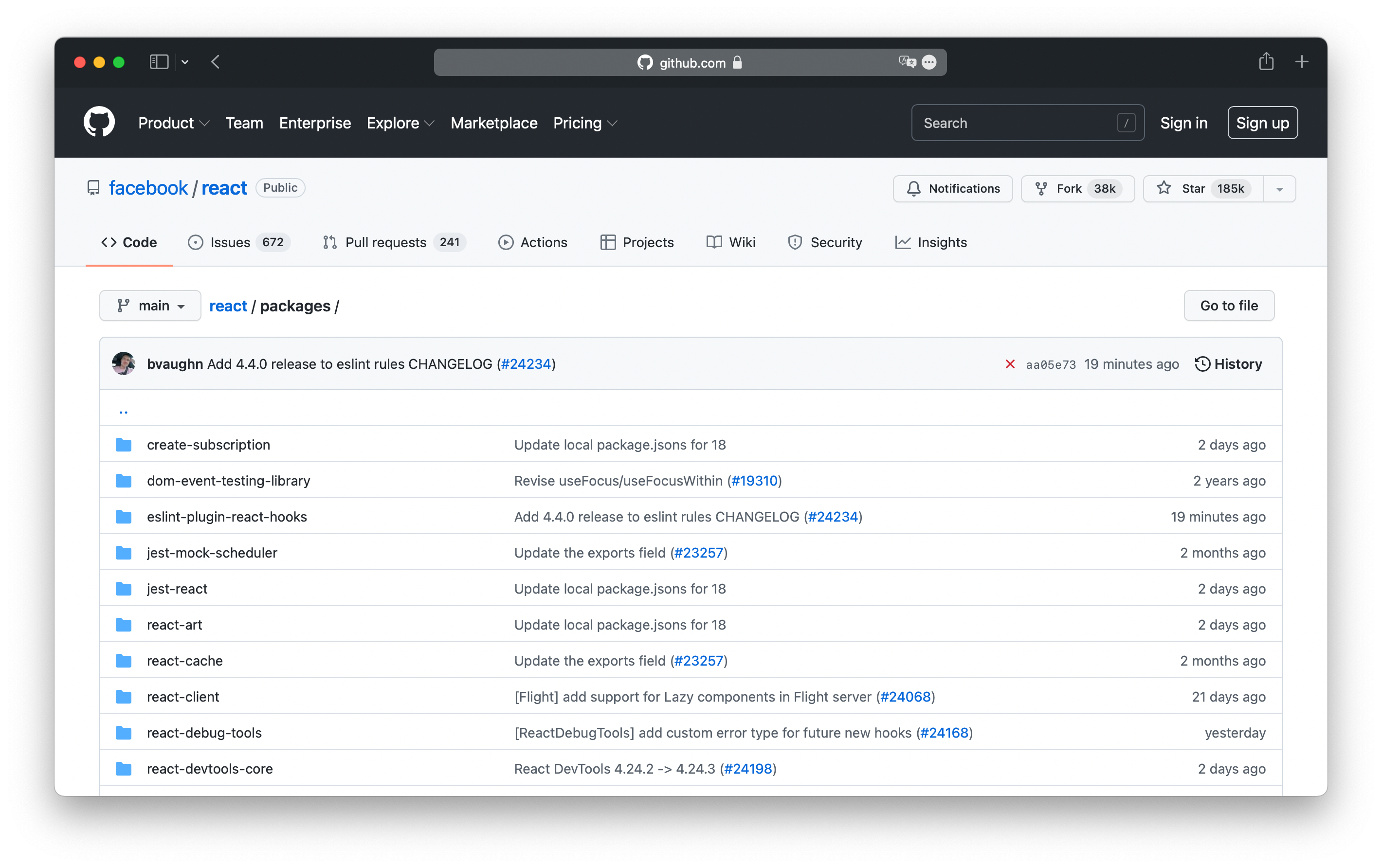

The most popular use cases of workspaces are libraries with optional plugins or tools, which are distributed as individual npm packages. Notable example repositories are React, Babel and Storybook. But you will learn in this article how this setup can also prevent most of the issues in the example scenario above.

The React repository is a monorepo with currently 37 packages

What do I need to set up workspaces?

All popular package managers support workspaces today: Yarn v1 and v2, npm since version 7 (which shipped with Node.js v15) and pnpm.

The implementation of and features around workspaces differ between the package managers. We will be using Yarn v1 for our examples.

There are several other tools that offer additional features for monorepos on top of the package managers, such as:

- Lerna, which can help with versioning and publishing the packages in your monorepo

- Nx and Turborepo, which help speed up multi-package builds in monorepos. Nx can also bootstrap popular services in your monorepo like a React frontend, Express backend, a Storybook and a complete testing setup.

We will cover these tools later. You don’t absolutely need them for getting started with your monorepo, but they can be very helpful once your monorepo grows bigger.

This is only a small selection of tools that can help with managing monorepos. For more advanced use cases, the curated Awesome Monorepo list is a good starting point.

An exemplary repository structure

Going back to our example from the beginning, one approach to structure the application could be the following:

    (repository root directory)
    └── packages
        ├── apps
        │   ├── e2e-tests
        │   ├── marketing-site
        │   ├── payment-service
        │   ├── storybook
        │   ├── user-service
        │   └── web-app
        └── libs
            ├── cms-client
            ├── payment-client
            ├── shared
            └── ui-components

It would also be possible to just put everything directly into the /packages folder, but distinguishing between apps and libs can make it easier to get an overview of what each package does:

- apps: services that can be built, launched and deployed independently, such as web apps, backend services, marketing landing pages, documentation websites, or an end-to-end test suite

- libs: can be added as dependencies to apps or other libs

Each package can be initialized like it was in a repository on its own. You create the directory, add a package.json file, install dependencies and add scripts like build, start etc., depending on what the package does.

Additionally, we now add another package.json file in the root directory of the monorepo with the following content:

    {
      "name": "my-product",
      "version": "0.0.0",
      "license": "UNLICENSED",
      "private": true,
      "engines": {
        "yarn": "1"
      },
      "workspaces": [
        "packages/apps/*",
        "packages/libs/*"
      ],
      "scripts": {},
      "devDependencies": {}
    }

The workspaces field tells the package manager where our packages are located. By setting "private": true, we prevent the root package from being published to the npm registry. The next time we run yarn in the root directory, the packages are linked together and we can use the workspace features.
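To get a feeling for what the workspaces patterns select, here is a deliberately simplified sketch. It is not how Yarn is implemented: the function names are made up for this example, and only a `*` that stands for exactly one path segment is supported. Real package managers use full glob matching and only treat folders that contain a package.json as packages.

```typescript
// Simplified illustration of how workspace patterns select package folders.
// A "*" stands for exactly one path segment; nothing else is supported here.
function matchesWorkspacePattern(pattern: string, dir: string): boolean {
  const patternParts = pattern.split("/");
  const dirParts = dir.split("/");
  if (patternParts.length !== dirParts.length) {
    return false;
  }
  return patternParts.every(
    (part, index) => part === "*" || part === dirParts[index],
  );
}

// Returns the folders that match at least one of the configured patterns.
function findWorkspaces(patterns: string[], dirs: string[]): string[] {
  return dirs.filter((dir) =>
    patterns.some((pattern) => matchesWorkspacePattern(pattern, dir)),
  );
}

const workspaces = findWorkspaces(
  ["packages/apps/*", "packages/libs/*"],
  [
    "packages/apps/web-app",
    "packages/libs/ui-components",
    "packages/apps", // the grouping folder itself is not a package
    "scripts/release", // outside the configured patterns
  ],
);

console.log(workspaces);
```

With the patterns from our root package.json, only the two folders below packages/apps and packages/libs are selected. The grouping folder and anything outside /packages are ignored.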

We don’t have any scripts or devDependencies for now; we will add them later in part 2 of the article to create tasks that affect multiple packages, such as running all test suites or publishing all updated packages.

So, how does this help me now?

First of all, workspaces alone are no general remedy for all the potential issues mentioned at the beginning of this article. You can still mess up your project with workspaces. But the approach enables you to maintain a clean project structure, which helps keep complexity manageable even when the project grows to multiple services that need to work together to deliver new full-stack, user-facing features.

Here are some of the main advantages:

Advantage 1: Better overview of dependencies

By splitting up our application into multiple packages, we can automatically maintain a better overview of what specific dependencies are used for, as the dependency list of each package will be much shorter than the giant one you used to have before. This already provides some form of documentation without actually having to write documentation. It’s already in the code.

In our example, we can split up the frontend of our application into multiple packages:

- web-app: The main frontend application in the framework of your choice, e.g. Next.js.

- ui-components: UI components used in your application. This package needs only a few dependencies: React, something to process styles like Sass or styled-components, TypeScript and maybe 2–3 more packages.

- storybook: Only has dependencies to build and run the Storybook website, like Storybook itself, some of its plugins and Webpack. The actual Storybook stories remain in the ui-components package, alongside the implementation of the respective components.

- e2e-tests: Contains end-to-end tests for your application and the dependencies to run them, such as Cypress or Playwright.

- cms-client and payment-client: Clients to interact with your backend services, which only need dependencies to fetch data, such as Axios or a GraphQL client.

- shared: Holds shared code like configuration, translations, TypeScript types or test fixtures and might not need any (dev)dependencies at all besides TypeScript.
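As an illustration of what could live in the shared package, here is a minimal sketch of a type that both a backend service and the frontend use. All names and the shape of the data are made up for this example. In a real setup the two "sides" would be separate packages that import the type from shared.

```typescript
// Hypothetical contents of the shared package: one type definition that both
// the backend service and the frontend import.
type PaymentStatus = "pending" | "paid" | "failed";

interface PaymentStatusResponse {
  orderId: string;
  status: PaymentStatus;
}

// Runtime check, because data arriving over the network is untyped.
function isPaymentStatusResponse(value: unknown): value is PaymentStatusResponse {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  return (
    typeof candidate.orderId === "string" &&
    (candidate.status === "pending" ||
      candidate.status === "paid" ||
      candidate.status === "failed")
  );
}

// Backend side: builds a response that must satisfy the shared type.
function buildResponse(orderId: string, status: PaymentStatus): PaymentStatusResponse {
  return { orderId, status };
}

// Frontend side: parses the payload and narrows it to the same type.
function parseResponse(json: string): PaymentStatusResponse {
  const parsed: unknown = JSON.parse(json);
  if (!isPaymentStatusResponse(parsed)) {
    throw new Error("Unexpected payment status payload");
  }
  return parsed;
}

const received = parseResponse(JSON.stringify(buildResponse("order-1", "paid")));
console.log(received.status);
```

If the backend changes the shape of the response, the type changes in one place, and the compiler points to every frontend usage that needs to follow.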

It’s possible that some of your packages need the same dependencies. In this case you just add them to each package separately. This is fine, because the package manager will automatically centralize and deduplicate dependencies for the whole monorepo in the node_modules folder of the repository root directory. Just be sure to keep the version of the same dependency in sync between the packages to avoid multiple installations of the same package.
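Keeping versions in sync is easy to check automatically. The following is a minimal sketch of such a check, assuming the package.json contents have already been loaded into objects. The function name, the package names and the version numbers are made up for this example.

```typescript
// Minimal shape of the package.json fields this check looks at.
interface Manifest {
  name: string;
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

// dependency name -> version range -> names of the packages declaring that range
type RangeUsage = Record<string, Record<string, string[]>>;

function findVersionMismatches(manifests: Manifest[]): RangeUsage {
  const usage: RangeUsage = {};

  for (const manifest of manifests) {
    const declared = { ...manifest.dependencies, ...manifest.devDependencies };
    for (const dependency of Object.keys(declared)) {
      const range = declared[dependency];
      usage[dependency] = usage[dependency] ?? {};
      usage[dependency][range] = [...(usage[dependency][range] ?? []), manifest.name];
    }
  }

  // Keep only dependencies that are declared with more than one range.
  const mismatches: RangeUsage = {};
  for (const dependency of Object.keys(usage)) {
    if (Object.keys(usage[dependency]).length > 1) {
      mismatches[dependency] = usage[dependency];
    }
  }
  return mismatches;
}

// Hypothetical manifests; the version numbers are invented for the example.
const mismatches = findVersionMismatches([
  { name: "@my-product/web-app", dependencies: { react: "^17.0.2" } },
  { name: "@my-product/ui-components", dependencies: { react: "^17.0.1" } },
  { name: "@my-product/storybook", devDependencies: { typescript: "^4.5.0" } },
  { name: "@my-product/shared", devDependencies: { typescript: "^4.5.0" } },
]);

console.log(JSON.stringify(mismatches, null, 2));
```

Here only react is reported, because two packages declare it with different ranges. Different ranges do not always lead to duplicate installations, but identical ranges are the simplest way to rule them out.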

Advantage 2: Better reusability

Tools like Storybook allow us to create, test and document our UI components independently from the application where they are used. If we create our UI components in their own independent package, we gain another level of independence from our web-app: Now that the components only have access to the dependencies of their own package, we are no longer tempted to use implicit dependencies of the web app, like components from Next.js or app-specific configuration variables, which would couple the components to this one application again. We could only use them by adding Next.js or the whole web app as a dependency to our ui-components package, which would feel wrong even to junior developers.

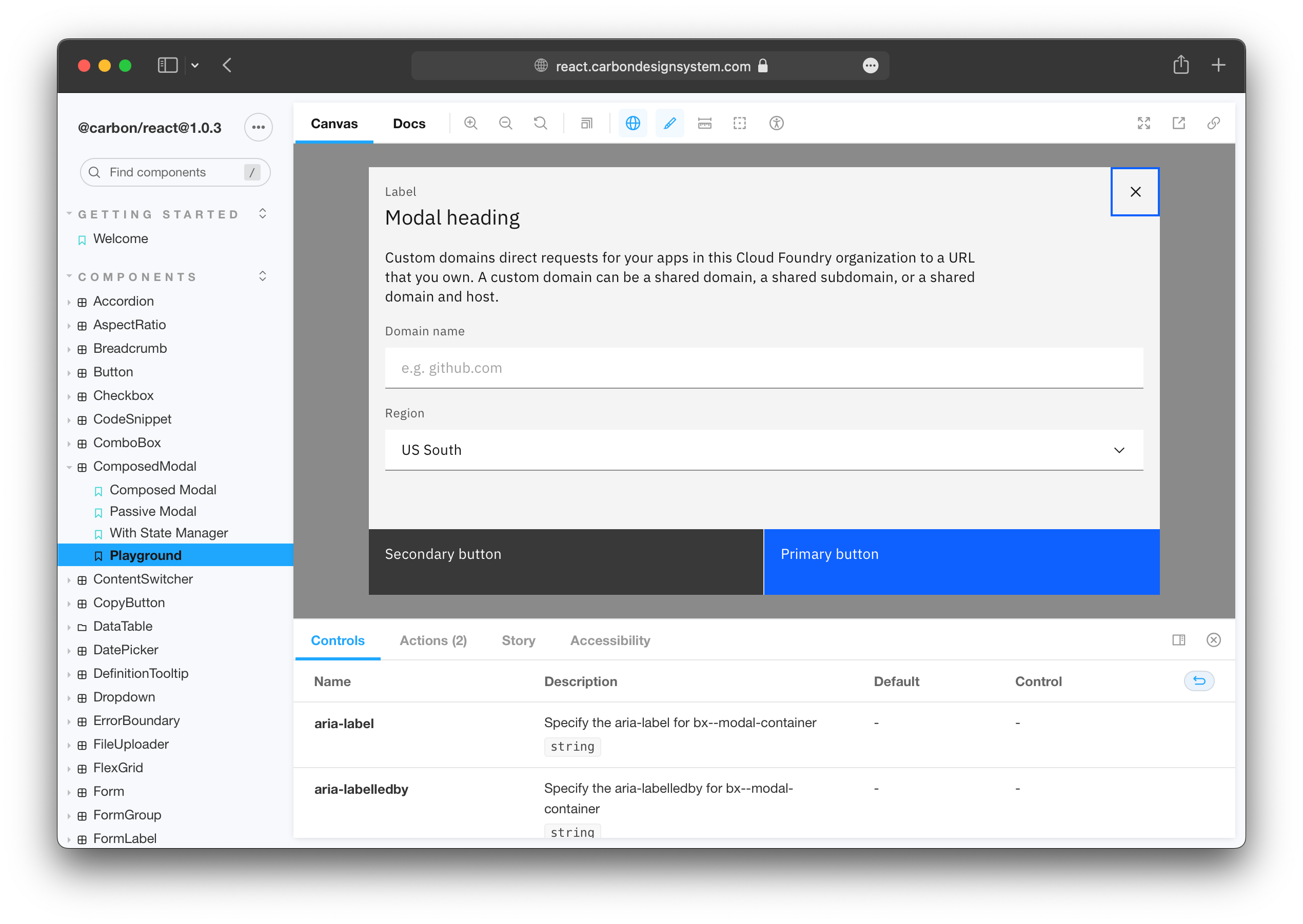

Component libraries can help you write better components, even when they are just used by one application.

Instead, we are forced to develop our components in a way that they work on their own, but can be integrated easily into other applications. This usually improves code quality, as you need to think about the interface of your components more thoroughly: If you have a hyperlink component that needs to work in Next.js as well, forward its ref. If you have an input component that should manipulate state in the target application, allow passing event handlers as props. If you have a component that you want to address in an end-to-end test of your application, allow passing arbitrary props like data-testid.

Now that our component works universally, we can also use it in the marketing-site package without additional effort. As the tests and documentation of the components are centralized in the ui-components package, we save duplicate work. If a completely different application from another branch of our company also wants to use the components later, we could achieve this by publishing the package to a (possibly internal) npm registry.

Since storybook also resides in its own package, we can easily replace it with another tool if the need arises without affecting the ui-components package, or gradually migrate to another tool by just adding another package.

If we still need Next.js dependent components in our Next.js web-app package, we can just keep them directly in that package. This is OK, as we cannot use them in other applications anyway.

Advantage 3: Better decoupling and testability

In the previous example we learned that, next to increased reusability, we will generally also design more clearly defined interfaces for the parts of our applications that are extracted into their own packages. This has the additional advantage that it makes testing these packages, as well as the applications that use them, a lot easier. Let’s take an API client package as an example:

In a typical web app, we might add data fetching logic directly to our frontend components like so:

    import React, { useState, useEffect } from 'react';
    import axios from 'axios';

    const MyComponent = () => {
      const [data, setData] = useState([]);

      useEffect(() => {
        // The effect callback itself must not be async, so we wrap the request.
        const fetchData = async () => {
          const result = await axios(
            'https://my.api.service.com/api/v1/my-endpoint',
          );
          setData(result.data);
        };
        fetchData();
      }, []); // empty dependency array: fetch once after the first render

      return <>{/* ... display data */}</>;
    };

    export default MyComponent;

Maybe we have extracted and imported the API host name from a config file, maybe we use a custom hook for data fetching, but the general issue with this code remains: it’s very hard to test.

In order to test the data fetching logic, the test needs to know how the data fetching works to mock the configuration and maybe the request itself. Also, as data fetching is part of the component lifecycle, our test environment must be able to render the component to test the data fetching. Therefore writing this test will need some additional dependencies for rendering UI and mocking. This is possible, but it can be so much effort that it’s tempting to just not write the test at all. And even if you do, you might need to update your test regularly when you change something in the component, as the test depends on implementation details of your file.

Now if we extract data fetching into an API client package for each specific API we are using in our app, we can make things a lot easier:

Our API client package only needs to expose an init function that takes some configuration like an API key and then returns an object or class instance with data fetching methods that each can optionally take arguments and return a promise with some data:

    // in packages/apps/web-app/src/payment-client.ts
    import { createPaymentClient } from '@my-product/payment-client';
    import { paymentConfig } from './config';

    export const paymentClient = createPaymentClient(paymentConfig);

    // in packages/apps/web-app/src/component/my-component.tsx
    import React, { useState, useEffect } from 'react';
    import { paymentClient } from '../payment-client';

    // ...
    useEffect(() => {
      // The effect callback itself must not be async, so we wrap the request.
      const loadPaymentStatus = async () => {
        const paymentStatus = await paymentClient.getPaymentStatus(userId, orderId);
        // ...
      };
      loadPaymentStatus();
    }, [userId, orderId]);

The app that is using the client now doesn’t need to know how it works internally anymore: Whether it’s using REST, GraphQL or RPC, where the requests go to and what the responses look like, none of it matters. To test how our component handles the API data, we now only need to mock one API client method, which is far less work. The tests for the actual data fetching logic would happen in the API client package already.
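To make this more concrete, here is a minimal sketch of what the inside of such a client package could look like. Apart from createPaymentClient and getPaymentStatus, everything here is an assumption made up for this example: the config fields, the URL layout, the response shape and the injected fetchJson transport, which replaces a real HTTP library so that the sketch runs without a network.

```typescript
// Hypothetical response shape; the real API may look different.
interface PaymentStatusResponse {
  orderId: string;
  status: "pending" | "paid" | "failed";
}

// The transport is injected so that the client can be tested without a network.
type FetchJson = (url: string, headers: Record<string, string>) => Promise<unknown>;

interface PaymentClientConfig {
  baseUrl: string;
  apiKey: string;
  fetchJson: FetchJson;
}

interface PaymentClient {
  getPaymentStatus(userId: string, orderId: string): Promise<PaymentStatusResponse>;
}

function createPaymentClient(config: PaymentClientConfig): PaymentClient {
  return {
    async getPaymentStatus(userId, orderId) {
      // The URL layout is an assumption made up for this example.
      const path = `/users/${encodeURIComponent(userId)}/orders/${encodeURIComponent(orderId)}/status`;
      const body = await config.fetchJson(config.baseUrl + path, {
        Authorization: `Bearer ${config.apiKey}`,
      });
      return body as PaymentStatusResponse;
    },
  };
}

// In the client package's own tests, a fake transport records the request.
const requestedUrls: string[] = [];
const client = createPaymentClient({
  baseUrl: "https://payments.example.com/api/v1",
  apiKey: "test-key",
  fetchJson: async (url) => {
    requestedUrls.push(url);
    return { orderId: "order-1", status: "paid" };
  },
});

// In the web app's tests, the whole client is replaced by a one-method stub.
const stubClient: PaymentClient = {
  getPaymentStatus: async (_userId, orderId) => ({ orderId, status: "pending" }),
};

async function demo(): Promise<string[]> {
  const real = await client.getPaymentStatus("user-1", "order-1");
  const stubbed = await stubClient.getPaymentStatus("user-1", "order-2");
  return [real.status, stubbed.status];
}

const demoResult = demo();
demoResult.then((statuses) => console.log(statuses, requestedUrls));
```

The stub shows why testing the web app gets easier: the component test only has to provide an object with one method and never touches URLs, headers or HTTP mocking. Those details are covered once, in the client package.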

Advantage 4: Atomic full-stack deployments

Adding new features to a full-stack application usually requires changes and additions in multiple places: We might create a new backend endpoint, update the documentation (like an OpenAPI specification file), update the frontend to query the new endpoint, process the data and save it in the client state, then display it in a new UI component with some fresh graphic assets.

Depending on our organization structure, this can ideally be done by one full-stack team alone. If the code for all affected services is in the same repository, the entire feature can be implemented with a single pull request (or merge request, if you are a GitLab user). This means that the entire full-stack feature can be tested, built and deployed to production end-to-end in one go. With preview deployments, the interdisciplinary team can do code and design reviews and perform manual testing, if needed.

Of course you need a CI setup that supports all these features. This is a very complex topic on its own, and the implementation can vary drastically depending on your CI provider and deployment setup, so we cannot go into greater detail here in the scope of this article.

The effort is worth it though, as you can largely avoid version conflicts between your service deployments and situations like “Pull request #452 in repo A can only be merged after #78 in repo B and #113 in repo C”, which introduce unnecessary waiting time and communication overhead to your team.

Conclusion

In this article, we presented some issues that can arise when scaling web applications from MVPs to full-scale multi-service apps. We introduced workspaces as a way to avoid implicit dependencies and unmanaged complexity, which can contribute to these issues, ultimately leading to applications becoming unmaintainable as they grow.

Based on an exemplary web app, we outlined a high-level project setup using a monorepo and showed some of its advantages for teams that develop and grow web-based applications in the long run.

Check out part 2 of the article to learn

- how to configure popular tooling like TypeScript, Jest and ESLint in a monorepo

- how to save time running tasks for multiple packages

- how to release individual packages in your monorepo

- how to overcome typical challenges in a monorepo setup